Searching for extraterrestrial life on icy moons and getting a better understanding of the emergence of life are the key motivations behind the TRIPLE Projects.

The acronym TRIPLE stands for Technologies for Rapid Ice Penetration and subglacial Lake Exploration. The initiative consists of multiple bespoke projects, each addressing the challenging demands of a combined deep-sea and space mission.

In the iMarEx project, the use of both classical AI and machine and deep learning in different areas of maritime exploration is being researched and tested. In this context, AI concepts are developed, tested, and finally implemented on robotic watercraft using the example of surface vehicles (wave glider, catamaran). The concrete task is for a surface vehicle to autonomously locate the source of a liquid outflow, e.g., a water body with high turbidity or pollutants, and drive to the location of the outflow to enable further actions such as sampling or investigation.

Valles Marineris Explorer 3 - Robust Ground Exploration

Funding: The national aeronautics and space research centre, Federal Ministry for Economic Affairs and Climate Action

Website: https://www.vamex.space/

Project period: 2023-2025

Cooperation partner: University of Bremen – High-Performance Visualization, Institute of Communications and Navigation, FAU Lehrstuhl für Hochfrequenztechnik, DFKI Bremen

The goal of the VaMEx initiative is to develop an autonomous, heterogeneous robot swarm capable of cooperatively exploring an assigned mission area. Of particular interest is the prospective exploration of the Valles Marineris canyon on Mars, where elevated atmospheric pressure favors conditions for extraterrestrial life. The long-term aim is therefore to have an autonomous robotic swarm explore this region.

A central element of the robotic swarm consists of ground-based rovers and the walking robot Crex. These units and their algorithms are developed within the VaMEx3 Robust Ground Exploration (VaMEx3-RGE) project. Our Cognitive Neuroinformatics group is responsible for implementing a comprehensive solution for multi-robot navigation. One focus is on adapting and extending the systems and approaches developed in VaMEx-CoSMIC (DLR-KN), VaMEx-VIPE (DFKI-RIC), and Kanaria-K2I (University of Bremen), and on integrating them into the overall VaMEx3 system. On the algorithmic side, this concerns autonomous navigation methods that enable cooperative behavior among multiple heterogeneous entities, including approaches from autonomous navigation, multi-robot multi-sensor data fusion, and distributed, cooperative high-level planning. Furthermore, a new system for simultaneous localization and mapping combined with vectorial velocity estimation, based on millimeter-wave radar sensors, will be explored and integrated into the overall system.
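To make the idea of vectorial velocity estimation from radar more concrete, here is a minimal, generic sketch. It is not the project's method. It assumes planar motion, a single sensor, and detections that all come from static objects. The function name `estimate_ego_velocity` and the sign convention (a static target straight ahead of a forward-moving sensor has negative radial velocity) are illustrative assumptions.

```python
import math


def estimate_ego_velocity(detections):
    """Least-squares estimate of the sensor velocity (vx, vy) in the sensor frame.

    detections: iterable of (azimuth_rad, radial_velocity_mps) pairs, assumed
    to come from static objects. Assumed measurement model:
        v_r = -(vx * cos(azimuth) + vy * sin(azimuth))
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for azimuth, v_r in detections:
        c, s = math.cos(azimuth), math.sin(azimuth)
        # Accumulate the 2x2 normal equations A^T A v = A^T b.
        a11 += c * c
        a12 += c * s
        a22 += s * s
        b1 += -c * v_r
        b2 += -s * v_r
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("detections do not span enough distinct directions")
    vx = (a22 * b1 - a12 * b2) / det
    vy = (a11 * b2 - a12 * b1) / det
    return vx, vy
```

At least two detections at different azimuth angles are needed. In practice, moving objects would have to be rejected first (for example with a robust estimator), which this sketch leaves out.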

In summary, VaMEx3-RGE enables ground-based swarm elements to move to target locations within the exploration area and perform various tasks there. At the end of VaMEx3-RGE, heterogeneous drones and rovers autonomously explore a test area in Germany.

AI algorithms for autonomous lawnmowers

Funding: The Senator for Economic Affairs, Labour and Europe of the Free Hanseatic City of Bremen (Die Senatorin für Wirtschaft, Arbeit und Europa)

Website: -

Project period: 2021-2022

Cooperation partner: TOPA³S, Alpha Robotics

In this multi-disciplinary project, a lawn mower robot is enhanced so that it can autonomously navigate and mow very large areas of grass. For this purpose, AI algorithms from autonomous driving and space travel are transferred to and implemented on the robot. In particular, our work group designs a high-precision localization based on multi-sensor fusion: measurements from an RTK-GNSS receiver, an IMU, and other sensors are probabilistically fused in a Kalman filter. The project partners also implement a trajectory planner that avoids obstacles and no-go zones, as well as an engine control algorithm for the robot.
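The fusion principle can be illustrated with a deliberately small sketch: a one-dimensional Kalman filter that predicts with IMU acceleration and corrects with RTK-GNSS position fixes. This is not the project's filter. The state layout (position and velocity along one axis), the names `KalmanState`, `predict`, and `update`, and all noise values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class KalmanState:
    pos: float   # position along one axis [m]
    vel: float   # velocity along that axis [m/s]
    p_pp: float  # position variance
    p_pv: float  # position/velocity covariance
    p_vv: float  # velocity variance


def predict(s, accel, dt, accel_var):
    """Propagate the state with one IMU acceleration sample."""
    pos = s.pos + s.vel * dt + 0.5 * accel * dt * dt
    vel = s.vel + accel * dt
    # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and
    # Q = accel_var * G G^T, G = [dt^2 / 2, dt].
    p_pp = s.p_pp + 2 * dt * s.p_pv + dt * dt * s.p_vv + 0.25 * dt**4 * accel_var
    p_pv = s.p_pv + dt * s.p_vv + 0.5 * dt**3 * accel_var
    p_vv = s.p_vv + dt * dt * accel_var
    return KalmanState(pos, vel, p_pp, p_pv, p_vv)


def update(s, gnss_pos, gnss_var):
    """Correct the state with one GNSS position measurement (H = [1, 0])."""
    innovation = gnss_pos - s.pos
    s_cov = s.p_pp + gnss_var   # innovation variance
    k_pos = s.p_pp / s_cov      # Kalman gain, position row
    k_vel = s.p_pv / s_cov      # Kalman gain, velocity row
    return KalmanState(
        pos=s.pos + k_pos * innovation,
        vel=s.vel + k_vel * innovation,
        p_pp=(1 - k_pos) * s.p_pp,
        p_pv=(1 - k_pos) * s.p_pv,
        p_vv=s.p_vv - k_vel * s.p_pv,
    )
```

A typical loop calls `predict` at the IMU rate and `update` whenever a GNSS fix arrives. A real system would track a full 2D or 3D pose, estimate IMU biases, and handle GNSS outages, all of which are omitted here.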

PRORETA is an interdisciplinary research cooperation between universities and Continental AG. The goal of this cooperation is to develop advanced driver assistance systems (ADAS) and automated driving (AD) functions that prevent traffic accidents.

Odometry in urban environments

Funding: Federal Ministry for Economic Affairs and Energy

Website: @CITY

Project period: 2019-2021

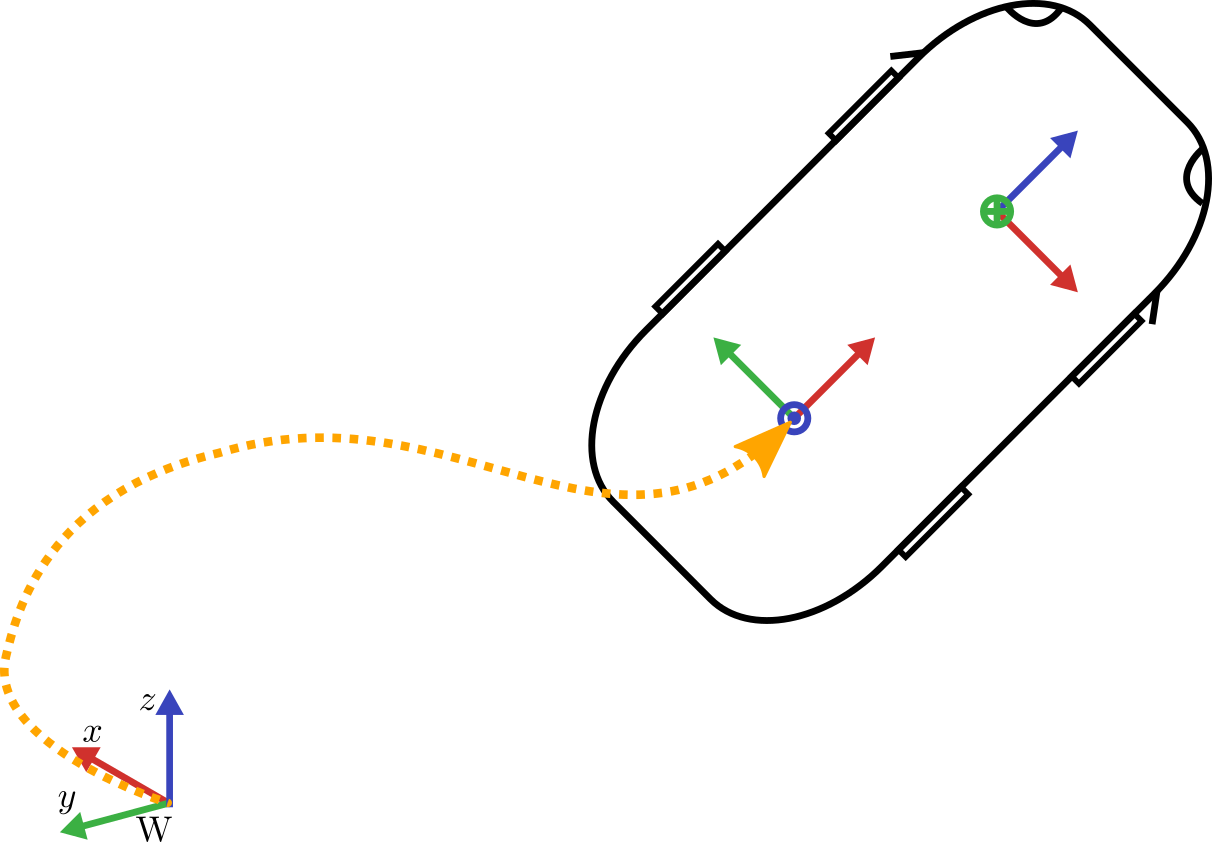

Cooperation partner: Continental AG

A crucial part of autonomous driving is the precise determination of the vehicle’s odometry. In contrast to absolute localization via GNSS methods, odometry only calculates the vehicle’s motion relative to its starting point. The resulting position, orientation, and velocity are used by several autonomous driving algorithms. In this project, different methods are investigated to achieve a high-frequency, jump-free odometry via multi-sensor fusion. A special focus is on the use of the inertial measurement unit (IMU) and the image stream of a camera.
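The relative nature of odometry can be shown with a small planar sketch: the pose is obtained by chaining body-frame motion increments onto the previous pose, always expressed in the frame of the starting point. The function names `wrap_angle` and `compose` and the 2D pose representation `(x, y, theta)` are illustrative assumptions, not part of the project's software.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(pose, delta):
    """Apply a body-frame increment (dx, dy, dtheta) to a pose (x, y, theta).

    The pose is expressed in the frame of the starting point; the increment
    is expressed in the vehicle's current body frame.
    """
    x, y, theta = pose
    dx, dy, dtheta = delta
    return (
        x + math.cos(theta) * dx - math.sin(theta) * dy,
        y + math.sin(theta) * dx + math.cos(theta) * dy,
        wrap_angle(theta + dtheta),
    )
```

Because every increment is added onto the previous estimate, small errors accumulate over time. This is why odometry drifts while still being smooth and jump-free, in contrast to absolute GNSS fixes.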

The final odometry algorithm uses the IMU and the camera in a so-called loosely coupled fashion. In addition, measurements of the steering wheel angle and the vehicle speed are integrated by means of suitable motion models. All sensor information is fused in an Unscented Kalman Filter (UKF) to produce the final odometry result.
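As an illustration of how speed and steering measurements can enter through a motion model, the following sketch uses a kinematic bicycle model. The source does not state which motion models the project uses, so this is only one common choice. The function name `bicycle_step`, the wheelbase of 2.8 m, and the steering ratio of 15 are hypothetical values.

```python
import math


def bicycle_step(x, y, yaw, speed, steering_wheel_angle, dt,
                 wheelbase=2.8, steering_ratio=15.0):
    """Advance a planar pose by one Euler step of a kinematic bicycle model.

    speed: vehicle speed [m/s]
    steering_wheel_angle: angle at the steering wheel [rad], positive to the left
    wheelbase, steering_ratio: assumed vehicle parameters (hypothetical values)
    """
    # Convert the steering wheel angle to the angle of the front wheels.
    road_wheel_angle = steering_wheel_angle / steering_ratio
    # Yaw rate implied by speed and front wheel angle.
    yaw_rate = speed * math.tan(road_wheel_angle) / wheelbase
    x_new = x + speed * math.cos(yaw) * dt
    y_new = y + speed * math.sin(yaw) * dt
    yaw_new = yaw + yaw_rate * dt
    return x_new, y_new, yaw_new
```

In a filter such as a UKF, a model like this could serve either as a prediction step or as a source of a yaw-rate pseudo-measurement. Which role it plays in the project is not specified in the text.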

Publications:

| No. | Publication |
|---|---|
| [3] | Visual-Inertial Odometry aided by Speed and Steering Angle Measurements. In 25th International Conference on Information Fusion (FUSION), IEEE, 2022. |
| [2] | Visual-Multi-Sensor Odometry with Application in Autonomous Driving. In 93rd IEEE Vehicular Technology Conference (VTC2021-Spring), IEEE, 2021. |
| [1] | Kalman Filter with Moving Reference for Jump-Free, Multi-Sensor Odometry with Application in Autonomous Driving. In 23rd International Conference on Information Fusion (FUSION), IEEE, 2020. |
